How can we… use AI to support clinical decision-making in intensive care?

19 February 2026

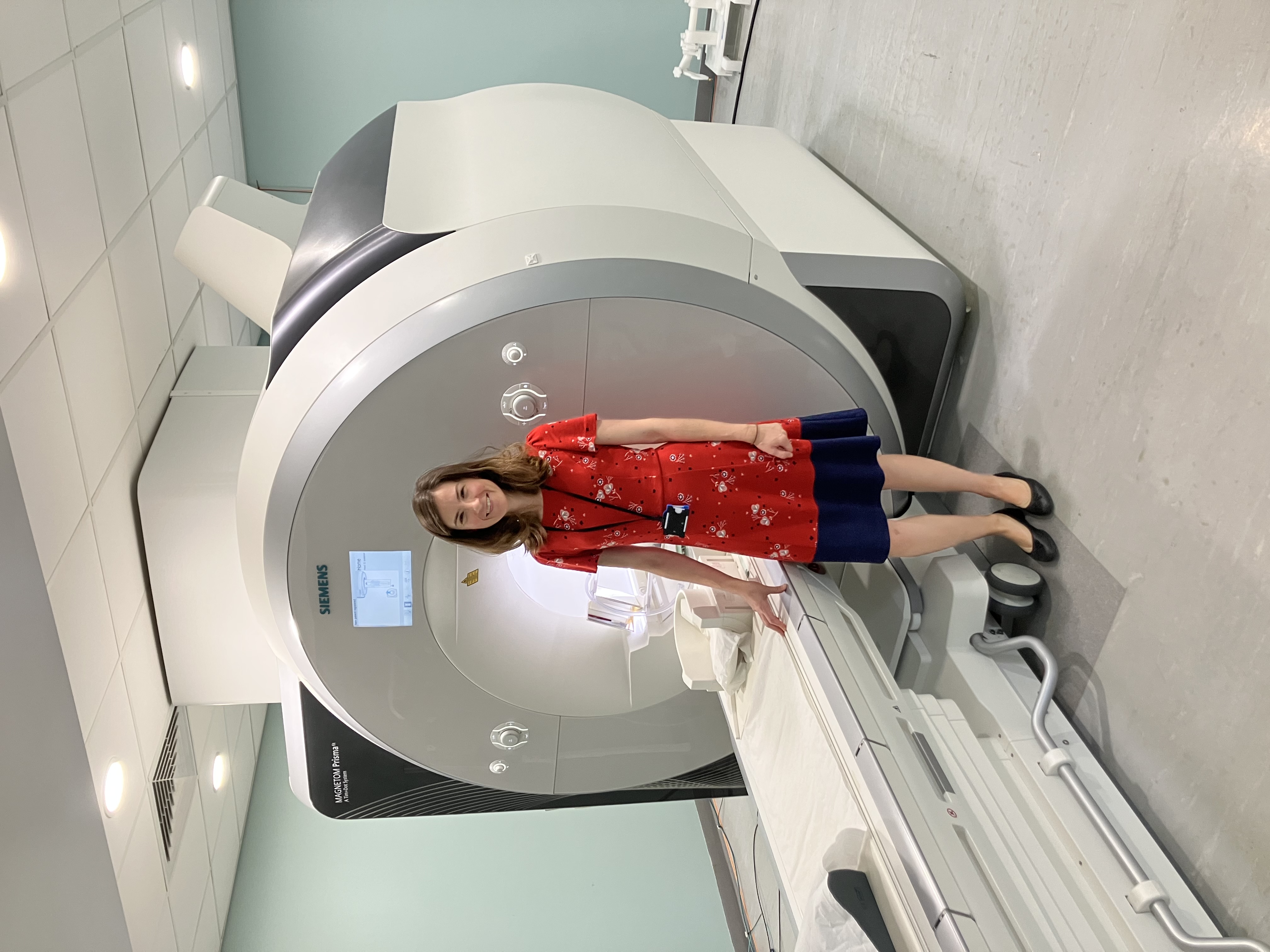

Intensive care units (ICUs) treat the sickest patients in the hospital and are among the most complex and resource-intensive environments in modern healthcare. Teams of specialists must make high-stakes decisions under intense time pressure, where even small delays or misjudgements can have major consequences for patient survival and recovery. At the same time, ICUs generate vast amounts of data, including continuous monitoring signals, ventilator settings, medication infusions, and laboratory test results. In my daily work in intensive care, I see how much valuable information is produced and how little of it actually informs clinical decisions: much of it remains fragmented across systems and is used only in limited ways at the bedside. Because clinicians can process only a fraction of this information, AI-driven methods offer a way to support more informed decisions, with the potential to improve resource use, reduce complications, and save lives.